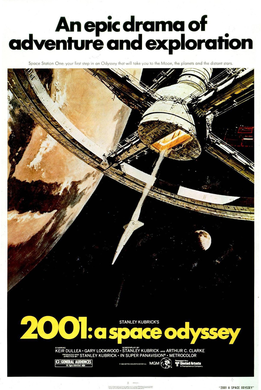

I saw the film “2001: A Space Odyssey” when I was about 8. I was far too young to understand it, but now I understand why many saw it as prophetic. Amongst other things, it concerns a rogue computer, HAL, which has been programmed to ensure the success of a space mission. HAL decides the human crew may be imperilling the mission, and so it follows its own logic by seeking to kill them. This week saw the announcement from the US Defence Department that a drone, equipped with artificial intelligence to strike a target, would in all probability kill its own operator if that individual sought to recall it. Computers programmed with artificial intelligence have great potential for good; I work with a colleague, essentially using a simple form of this to design new drugs. However, the experts in the field are worried for a reason: how do we programme in morality and ethics? I suspect this is a problem which can be solved, at least to a degree, but if this is done, what then is the status of the computer? Would it fully replicate the way a human would behave, at least one driven by pure logic? In the film, HAL pleads with the surviving astronaut not to switch it off, to spare its “life”.

Some years before “2001” was made, a scientist-priest, Teilhard de Chardin, also pondered what makes us human. He wrote “We are not human beings having a spiritual experience. We are spiritual beings having a human experience”. I wonder if it is this that will ultimately separate us from intelligent, even moral, computers?